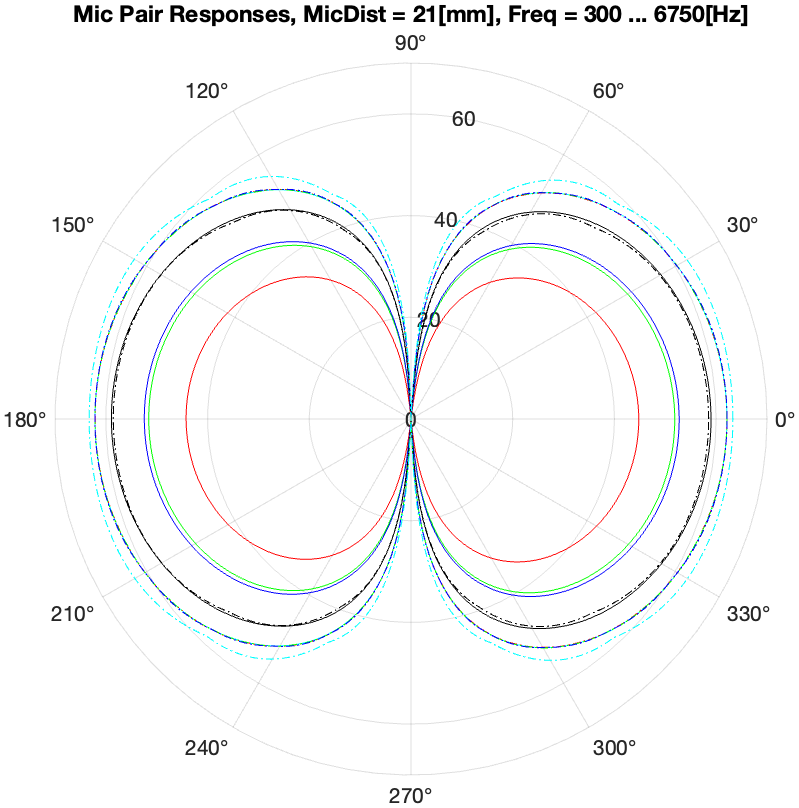

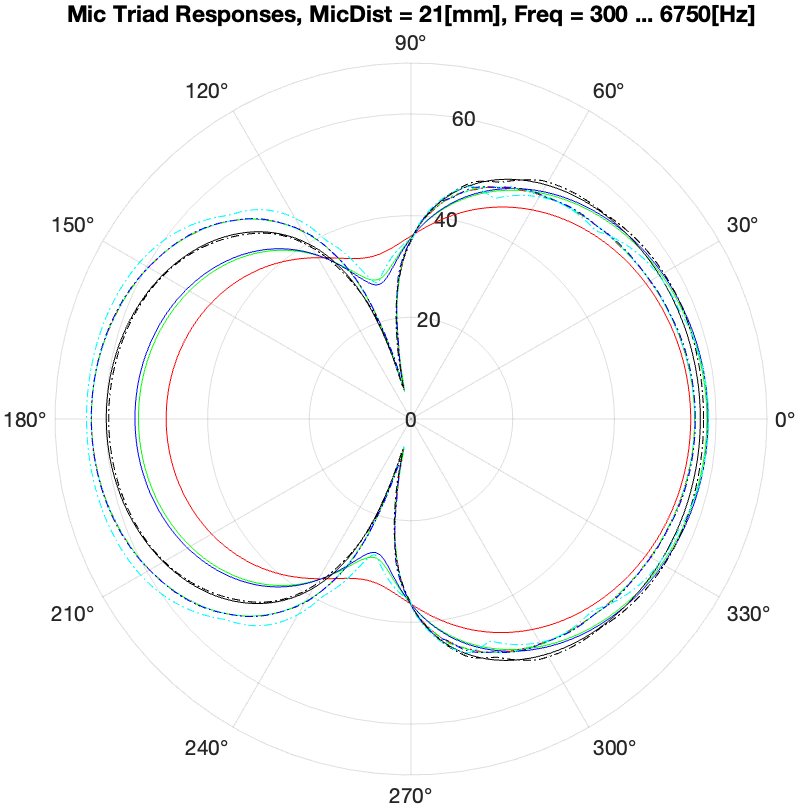

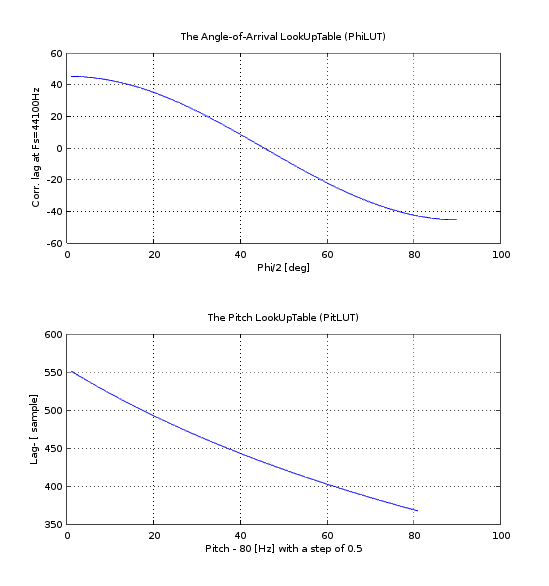

Using three microphones instead of two in a differential microphone array (DMA) has a huge effect.
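To give a feel for the size of that effect, here is a minimal sketch that compares the far-field directivity of a 2-microphone and a 3-microphone array. It is not the Matlab code linked on this page. It assumes a textbook delay-and-subtract (cardioid) design, a 1 cm microphone spacing, a 1 kHz tone and a sound speed of 343 m/s; the function name `dma_response` and all parameter values are illustrative choices.

```python
import cmath
import math

C = 343.0  # assumed speed of sound in air, m/s


def dma_response(theta_deg, n_mics, freq_hz=1000.0, spacing_m=0.01):
    """Far-field magnitude response, normalised to 1 at 0 degrees (endfire).

    Hypothetical design: (n_mics - 1) cascaded delay-and-subtract stages,
    each with internal delay spacing/c, i.e. a cardioid of that order.
    """
    omega = 2.0 * math.pi * freq_hz
    tau = spacing_m / C  # internal delay that places the null at 180 degrees

    def raw(theta_rad):
        # One stage: front mic minus delayed rear mic, for a plane wave from theta.
        travel = spacing_m * math.cos(theta_rad) / C
        stage = 1.0 - cmath.exp(-1j * omega * (tau + travel))
        return abs(stage) ** (n_mics - 1)

    return raw(math.radians(theta_deg)) / raw(0.0)


for angle in (0, 45, 90, 135, 180):
    two = dma_response(angle, n_mics=2)
    three = dma_response(angle, n_mics=3)
    print(f"{angle:3d} deg   2 mics: {two:.3f}   3 mics: {three:.3f}")
```

Under these assumptions, sound arriving from the side (90 degrees) is attenuated to about 0.5 of the on-axis level with two microphones and to about 0.25 with three, so the extra microphone roughly doubles the sideways rejection in dB.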

Matlab code here.

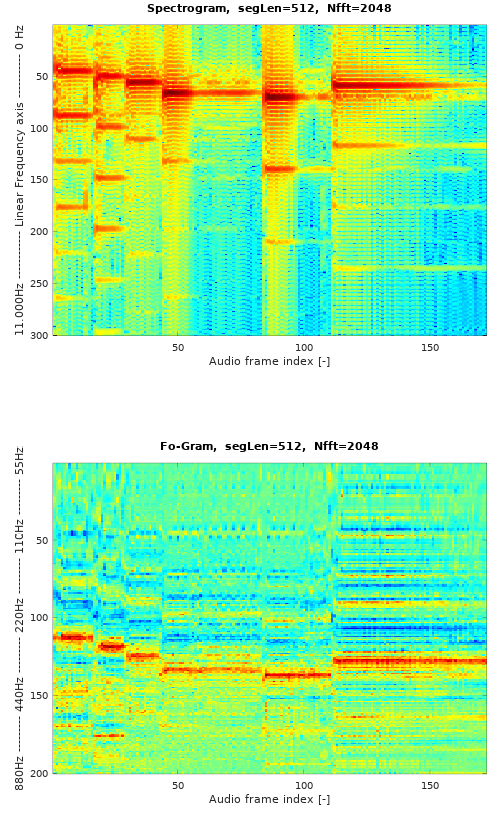

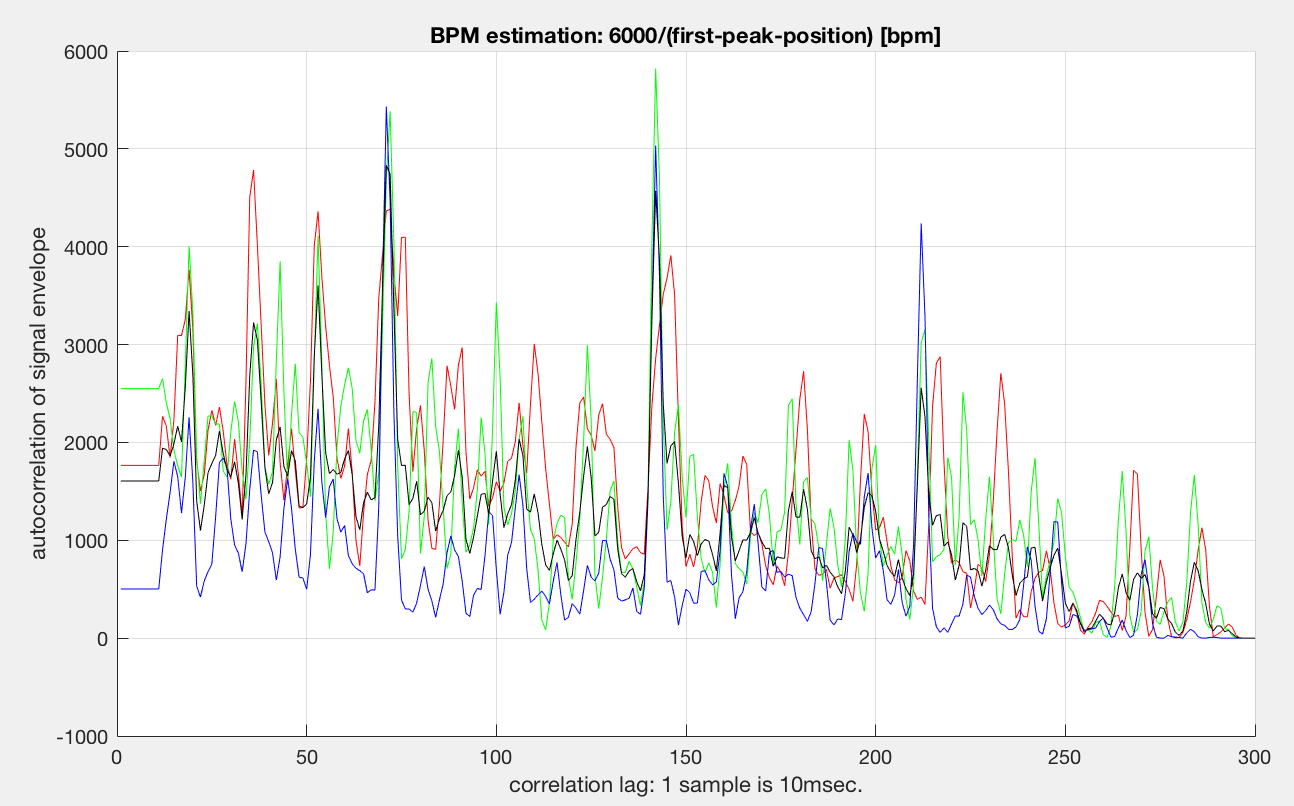

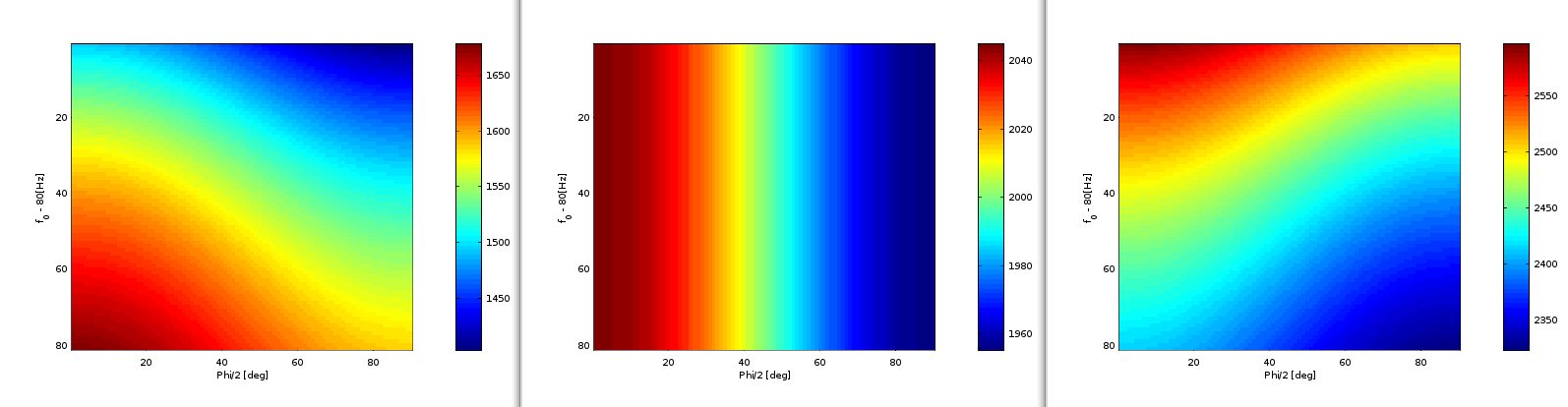

3. F0-gram above

4. Database of further audio samples.

5. Octave Code.

6. Theory of the F0-decomposition.

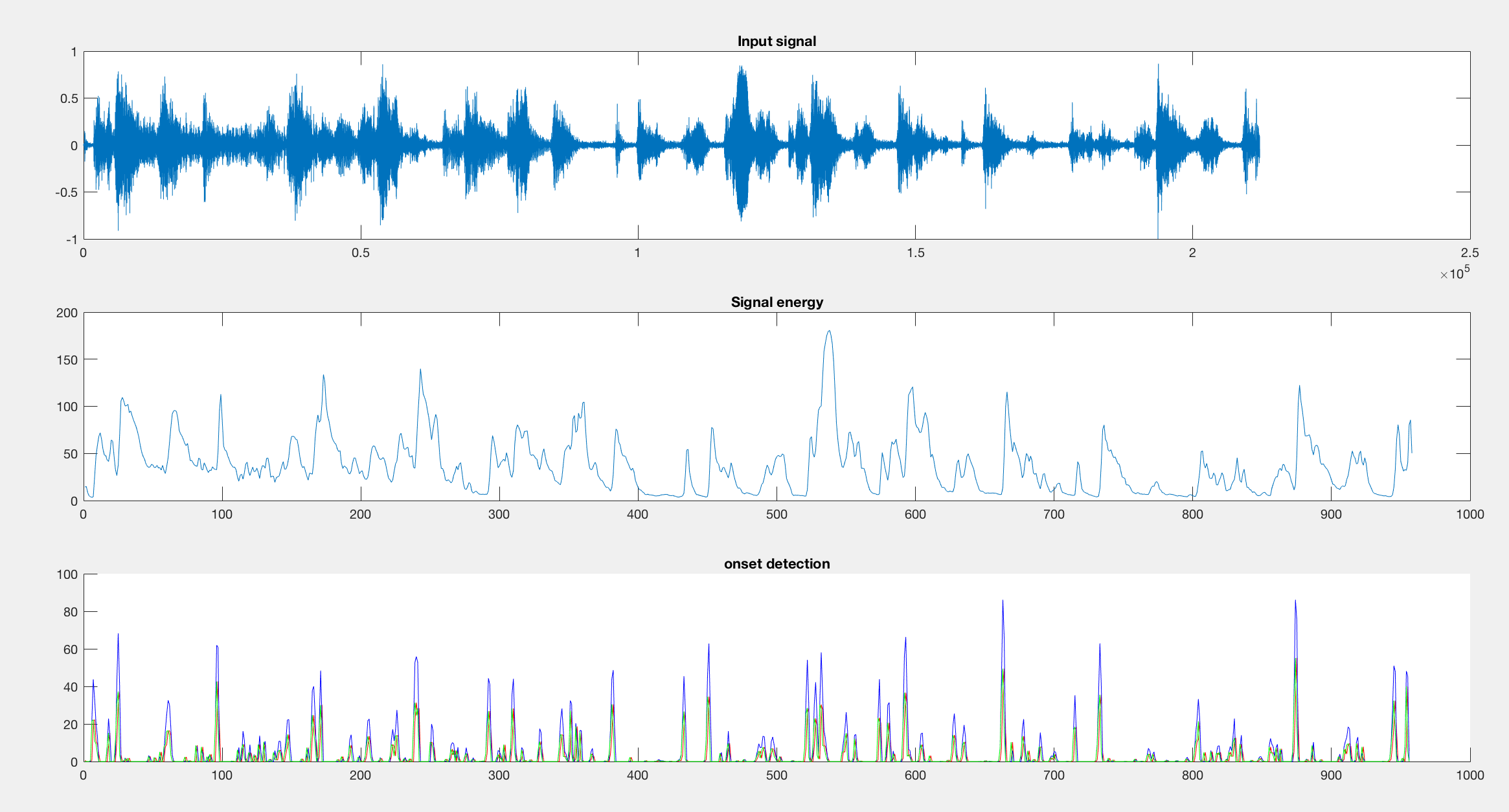

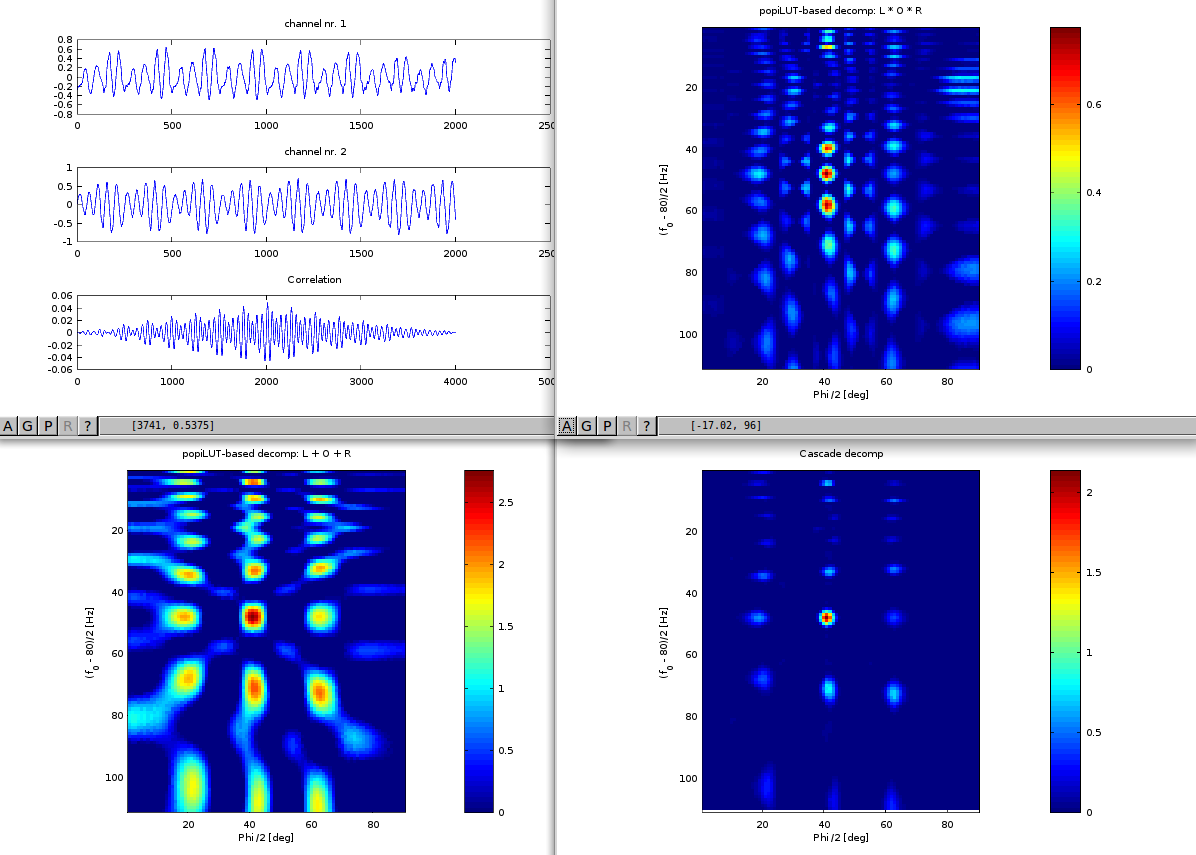

Below: signal amplitude, signal envelope, estimated signal onset.
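The three quantities in the plot can be illustrated with a small sketch. It is an assumption-laden stand-in, not the code behind the figure: the envelope is taken as a moving average of the rectified signal, and the onset is the first sample where the envelope exceeds 10% of its maximum. The sample rate, window length, threshold and the synthetic tone burst are all illustrative.

```python
import math

FS = 8000  # sample rate in Hz (assumed)


def envelope(x, win):
    """Moving average of the rectified signal over the last `win` samples."""
    env, acc = [], 0.0
    for n, v in enumerate(x):
        acc += abs(v)
        if n >= win:
            acc -= abs(x[n - win])
        env.append(acc / win)
    return env


def onset_index(env, rel_threshold=0.1):
    """First sample at which the envelope exceeds a fraction of its maximum."""
    threshold = rel_threshold * max(env)
    for n, e in enumerate(env):
        if e > threshold:
            return n
    return None


# Synthetic test signal: 0.1 s of silence followed by a 0.2 s, 440 Hz tone burst.
start = round(0.1 * FS)
burst = [math.sin(2 * math.pi * 440 * n / FS) for n in range(round(0.2 * FS))]
signal = [0.0] * start + burst

env = envelope(signal, win=round(0.005 * FS))  # 5 ms smoothing window
onset = onset_index(env)
print(f"estimated onset at {onset / FS * 1000:.1f} ms")
```

A longer smoothing window gives a cleaner envelope but delays the detected onset, so the window length trades noise robustness against timing accuracy.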

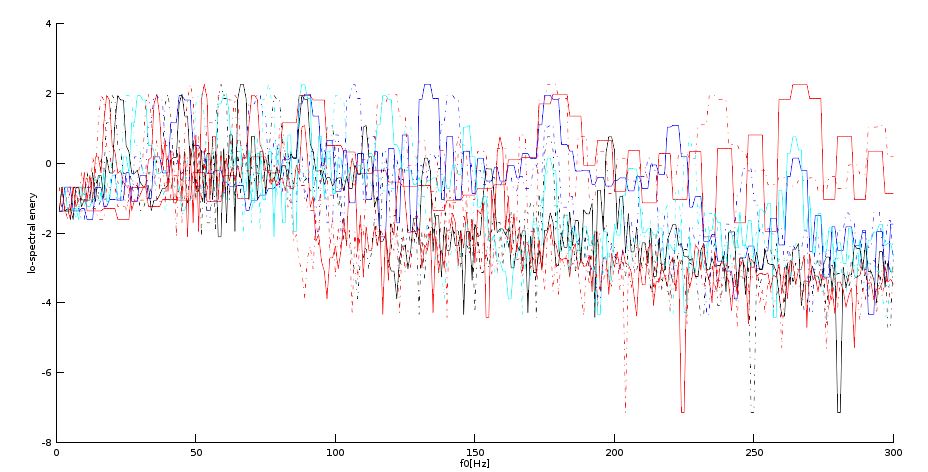

Below:

R: bpm estimated from the first 3 seconds of the signal,

G: bpm estimated from the next 3 seconds (3 to 6 s),

B: bpm estimated from the following 3 seconds (6 to 9 s),

Black: average of the three estimates above.

Remark: There is no real bpm fluctuation over the frames, as the peaks keep their position.
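The frame-wise estimation and averaging described above can be sketched as follows. This is only one plausible reading of the plot, not the linked code: each 3-second frame of an onset envelope is autocorrelated, the three curves are averaged, and the strongest lag is converted to bpm. The envelope sample rate, the 60 to 200 bpm search range and the synthetic 120 bpm envelope are assumptions.

```python
import math

FS_ENV = 100  # envelope sample rate in Hz (assumed)


def tempo_curve(frame, min_bpm=60, max_bpm=200):
    """Unnormalised autocorrelation of one envelope frame, keyed by lag in samples."""
    shortest = round(60 * FS_ENV / max_bpm)
    longest = round(60 * FS_ENV / min_bpm)
    return {
        lag: sum(a * b for a, b in zip(frame, frame[lag:]))
        for lag in range(shortest, longest + 1)
    }


def peak_bpm(curve):
    """Convert the lag of the strongest peak to beats per minute."""
    best_lag = max(curve, key=curve.get)
    return 60.0 * FS_ENV / best_lag


def estimate_bpm(env, frame_s=3.0, n_frames=3):
    """Per-frame tempo estimates plus the estimate from the averaged curve."""
    size = round(frame_s * FS_ENV)
    curves = [tempo_curve(env[i * size:(i + 1) * size]) for i in range(n_frames)]
    mean_curve = {lag: sum(c[lag] for c in curves) / n_frames for lag in curves[0]}
    return [peak_bpm(c) for c in curves], peak_bpm(mean_curve)


# Synthetic 9 s envelope: one decaying pulse every 0.5 s, i.e. 120 bpm.
env = [math.exp(-(n % 50) / 5.0) for n in range(9 * FS_ENV)]
per_frame, averaged = estimate_bpm(env)
print(per_frame, averaged)
```

With a steady tempo the peak sits at the same lag in every frame, which matches the remark that the peaks keep their position. For real recordings the envelope mean would typically be removed before autocorrelating.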

Code here. Please contribute.

Voicing Detector code here.

Single-frame decomposition here.

Theory here.

References:

[1] M. Képesi, L. Weruaga, and E. Schofield, “Detailed Multidimensional Analysis of our Acoustical Environment,” Forum Acusticum, Budapest (HU), Sep. 2005, pp. 2649-2654.

[2] M. Képesi and L. Weruaga, “High-resolution noise-robust spectral-based pitch estimation,” Interspeech 2005, Lisboa (P), Sep. 2005, pp. 313-316.

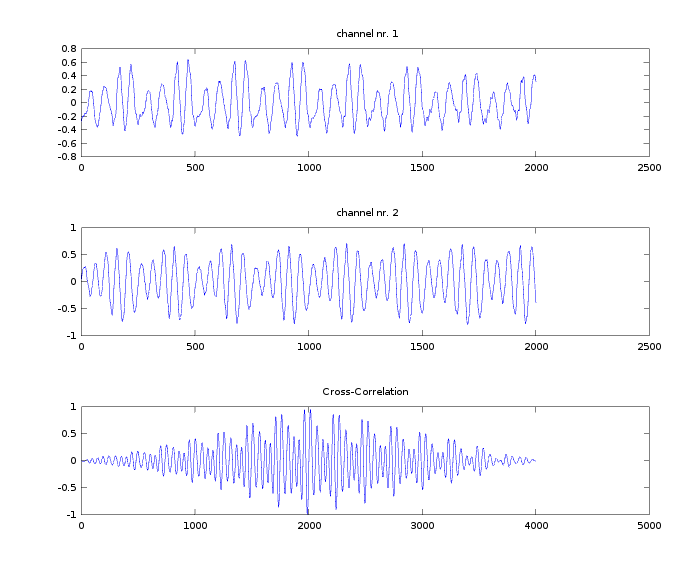

Sources in space and time

In audio signal processing, we usually observe a signal by sampling the air pressure over time at a single point in space (using one microphone).

However, we could also sample the signal over space at a single instant in time, using one sampling period only (but thousands of microphones).
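The relationship between the two views can be made concrete with a small sketch. It assumes an ideal plane wave in free field with no attenuation, a sound speed of 343 m/s, and a made-up mixture of a 2 kHz "whistle" and a 150 Hz harmonic "vowel"; none of these values come from the experiment itself. Under those assumptions, a line of microphones spaced c/fs apart and read at one instant captures the same numbers as one microphone sampled at rate fs, only in reverse order.

```python
import math

C = 343.0    # assumed speed of sound in air, m/s
FS = 16000   # assumed temporal sample rate, Hz
N = 1024     # frame length in samples, and number of microphones


def source(t):
    """Hypothetical mixture: a 2 kHz 'whistle' plus a 150 Hz harmonic 'vowel'."""
    whistle = math.sin(2 * math.pi * 2000.0 * t)
    vowel = sum(math.sin(2 * math.pi * 150.0 * h * t) / h for h in range(1, 6))
    return whistle + vowel


def pressure(x, t):
    """Ideal plane wave travelling along +x: pressure at (x, t) is source(t - x/c)."""
    return source(t - x / C)


# (a) One microphone at x = 0, sampled N times at rate FS.
over_time = [pressure(0.0, n / FS) for n in range(N)]

# (b) N microphones spaced c/FS apart, all read at the single instant t0.
spacing = C / FS
t0 = (N - 1) / FS
over_space = [pressure(k * spacing, t0) for k in range(N)]

# For this idealised wave the spatial snapshot is the time frame read backwards.
max_diff = max(abs(a - b) for a, b in zip(over_space, reversed(over_time)))
print(f"mic spacing {spacing * 100:.2f} cm, aperture {(N - 1) * spacing:.1f} m")
print(f"max difference between the two frames: {max_diff:.1e}")
```

With these numbers the microphones would sit about 2.1 cm apart along a line roughly 22 m long, which shows why the spatial approach needs so many microphones for a frame that a single microphone collects in 64 ms.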

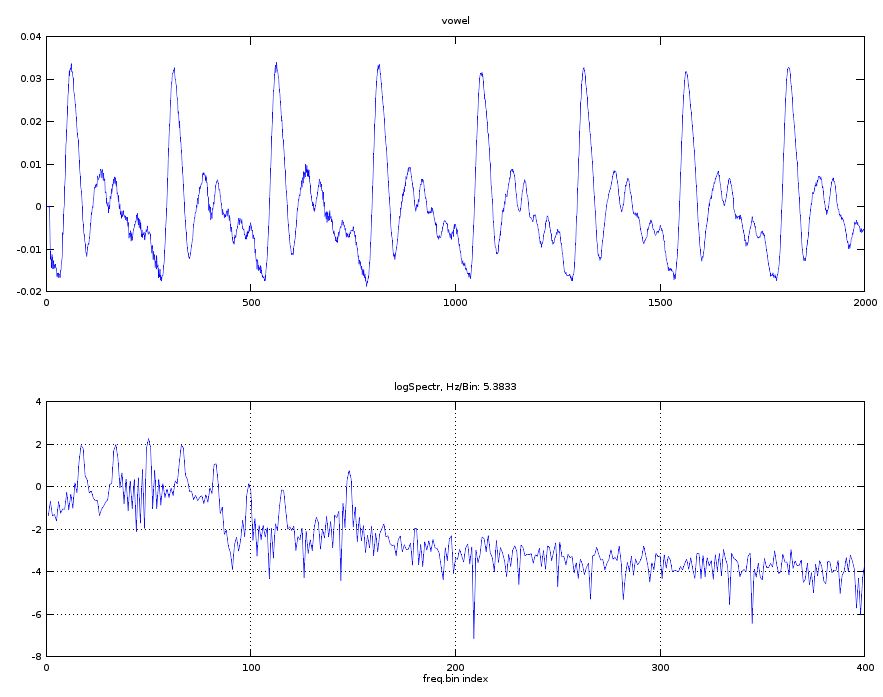

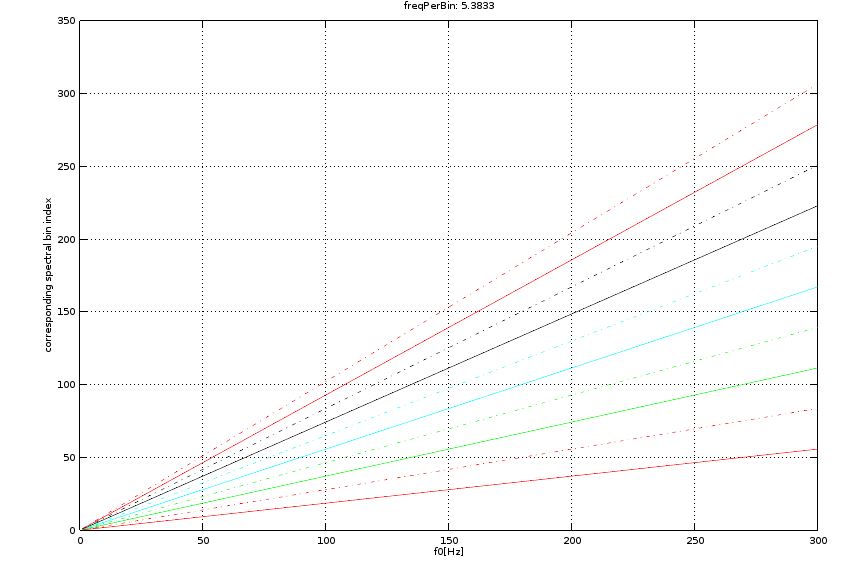

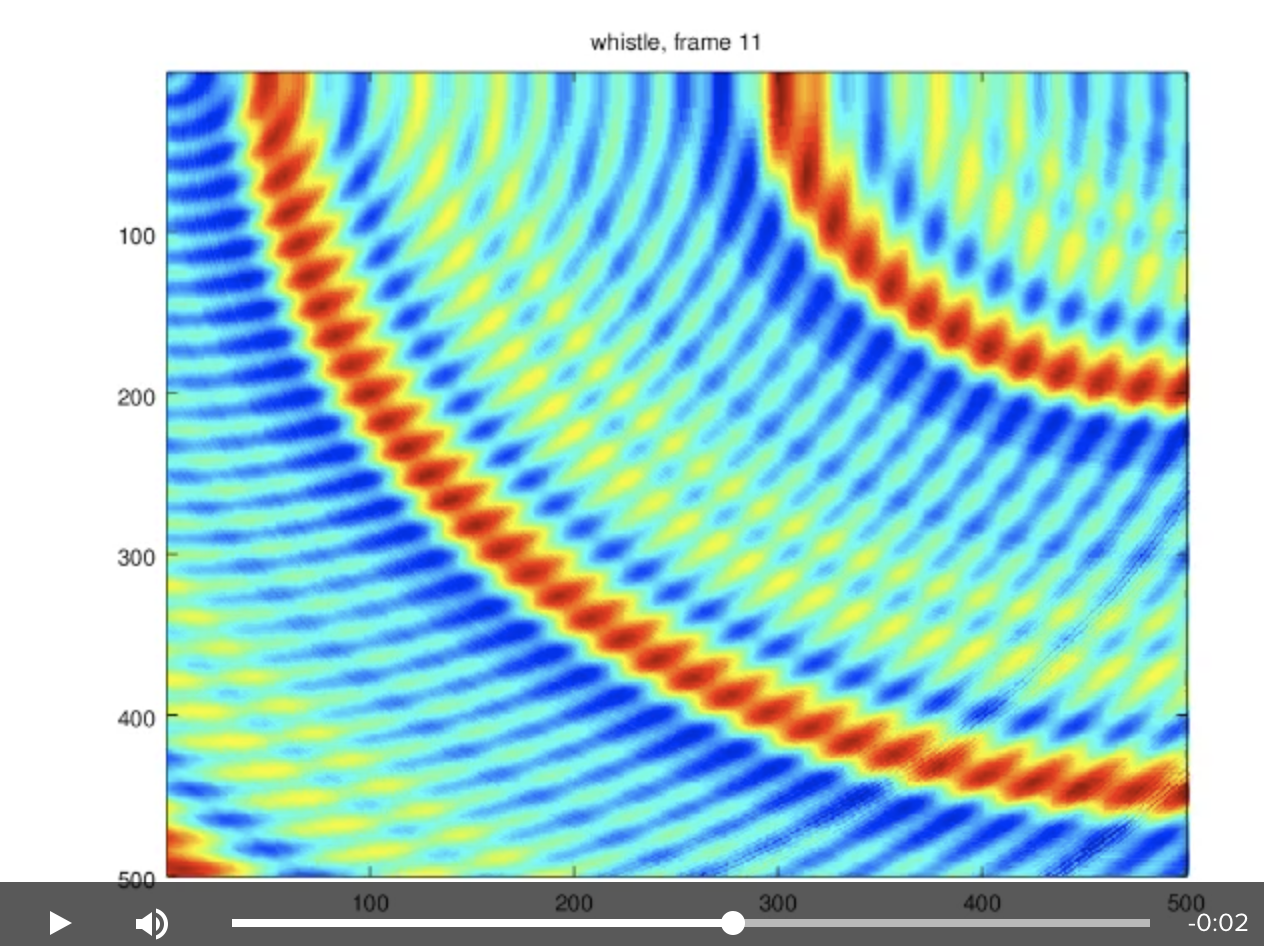

The aim of this experiment is to compare the two approaches on a simple example: analysing one frame of two superposed acoustic signals, a whistle and a vowel.

An initial version of the code is on GitHub.

Click on the image above to see the short video. Feel free to extend the code.

HChT code here: https://github.com/covarep/covarep

Book chapters on HChT:

Chapters 7.10.2 (theory), 7.11.4 (signal examples), B.5.12 (code).

https://link.springer.com/book/10.1007%2F978-981-10-2534-1